Ultimate Guide to Scaling APIs for Creator Platforms


Scaling APIs is critical for creator platforms to handle traffic spikes, real-time interactions, and third-party integrations. Here's how to do it effectively:

Architectural Design: Use event-driven, modular designs to manage unpredictable traffic and real-time demands.

Performance Techniques: Employ caching, load balancing, and database optimizations to minimize delays and improve response times.

Advanced Scaling: Combine horizontal (adding servers) and vertical (upgrading hardware) scaling strategies for flexibility and efficiency.

Security and Compliance: Implement token-based authentication, rate limiting, and encryption to protect data and meet regulations.

Monitoring and Testing: Continuously test and monitor APIs to identify bottlenecks and maintain performance.

Quick Comparison: Scaling Techniques

| Technique | Focus Area | Benefits |
|---|---|---|
| Event-Driven Design | Architecture | Handles traffic spikes asynchronously |
| Caching | Performance | Speeds up API responses |
| Load Balancing | Traffic Distribution | Prevents server overload |
| Database Optimization | Backend Performance | Reduces query times and improves scaling |
| Horizontal Scaling | Infrastructure | Adds servers for increased capacity |
| Vertical Scaling | Infrastructure | Upgrades individual server capabilities |

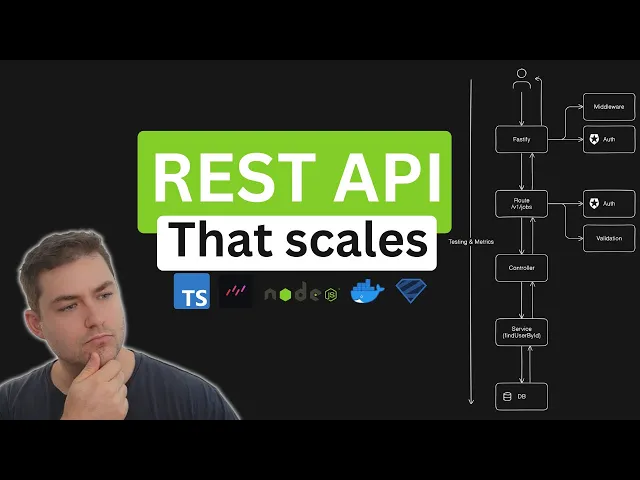


Building Scalable API Architectures

Creating APIs capable of meeting the demands of creator platforms requires careful architectural planning from the very beginning. The difference between a system that collapses during viral moments and one that thrives lies in the initial design decisions. A well-thought-out architecture doesn’t just accommodate more users - it ensures consistent performance even during sudden traffic surges. It’s no surprise that 82% of developers consider scalability a top priority in API design.

To handle unpredictable spikes in traffic and support real-time interactions, a robust architecture is essential. Let’s dive into how modular, event-driven designs tackle these challenges.

Modular and Event-Driven API Design

Traditional synchronous models often falter under heavy loads, making event-driven designs a better alternative. Event-driven architecture allows components to function independently by relying on asynchronous events.

This modular approach brings clear advantages. For example, businesses adopting event-driven methodologies have reported up to a 30% improvement in operational efficiency. Systems that embrace decoupling adapt to new requirements 50% faster. Additionally, companies using microservices within event-driven frameworks have seen a 30% reduction in time-to-market for new features. For creator platforms, this adaptability translates to quicker feature rollouts and a smoother user experience.

Take live streaming as an example: when a fan joins a session, separate processes handle authentication, video streaming, chat, and analytics independently. This separation ensures that a failure in one service doesn’t disrupt the entire experience.
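To make that separation concrete, here is a minimal in-process sketch. The `EventBus` class, the `fan_joined` event, and the handler names are hypothetical, and in production a broker such as Kafka would sit between the publisher and the subscribers. What it illustrates is fault containment: every handler runs independently, and an exception in one is recorded instead of aborting the others.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable

Handler = Callable[[dict], Awaitable[None]]


class EventBus:
    """Hypothetical in-process event bus: publishers never call subscribers directly."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    async def publish(self, event_type: str, payload: dict) -> list[str]:
        """Run every handler concurrently and return the names of handlers that failed."""
        handlers = self._subscribers[event_type]
        results = await asyncio.gather(
            *(handler(payload) for handler in handlers), return_exceptions=True
        )
        # A failure in one handler is collected here, not propagated to the others.
        return [h.__name__ for h, r in zip(handlers, results) if isinstance(r, Exception)]


processed: list[str] = []


async def start_chat(event: dict) -> None:
    processed.append(f"chat:{event['fan_id']}")


async def record_analytics(event: dict) -> None:
    raise RuntimeError("analytics store unavailable")  # simulated outage


async def start_video(event: dict) -> None:
    processed.append(f"video:{event['fan_id']}")


async def main() -> list[str]:
    bus = EventBus()
    for handler in (start_chat, record_analytics, start_video):
        bus.subscribe("fan_joined", handler)
    return await bus.publish("fan_joined", {"fan_id": "f42", "session": "live-1"})


failed = asyncio.run(main())
print(failed, processed)  # ['record_analytics'] ['chat:f42', 'video:f42']
```

The analytics outage is reported, but chat and video for the fan still start.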

Stanislav Khilobochenko from Clario shared his experience on the effectiveness of this approach:

"Shifting from a request-response model to an event-driven architecture completely changed how my API handled traffic spikes. Instead of overwhelming the system with synchronous processing, message queues like Kafka helped distribute the load more efficiently. This allowed background tasks to run asynchronously, keeping response times fast even during peak usage."

Here’s a quick comparison of synchronous and event-driven models:

| Aspect | Synchronous Model | Event-Driven Architecture |
|---|---|---|
| Communication Style | Synchronous, blocking | Asynchronous, non-blocking |
| Coupling | Tight coupling between services | Loose coupling via event channels |
| Scaling Pattern | Entire system scales together | Services scale independently based on load |
| Failure Handling | Failures often cascade | Failures are contained; events can be replayed |
| State Management | Maintained in databases | Recreated from event streams |
| Development Flexibility | Coordinated deployments needed | Independent service evolution |

By incorporating message brokers, systems can process up to 40% more transactions without sacrificing performance. This makes event-driven architecture a key strategy for platforms preparing for rapid growth.

Asynchronous Operations for Better Performance

Asynchronous programming is another game-changer when it comes to handling resource-heavy tasks like video uploads or AI processing. By minimizing wait times, asynchronous operations can improve response speeds by up to 30%.

For example, when TwinTone processes video call requests, its system handles resource allocation, participant authentication, and AI model initialization asynchronously. This ensures users get immediate feedback while background processes handle the heavy lifting.

Tools like Kafka distribute the workload efficiently, while background workers manage tasks like video encoding or AI inference without affecting user-facing APIs. Asynchronous database operations, such as batching writes, further reduce system strain during high-traffic periods. Additionally, features like automatic retries, dead-letter queues, and circuit breakers enhance error handling, making the system more resilient under sudden demand.
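A rough sketch of the retry and dead-letter pattern follows, using `asyncio.Queue` as a stand-in for a real message broker. The `encode_video` handler, the job shape, and the retry settings are assumptions for illustration, and circuit breakers are left out to keep it short. The upload endpoint answers "accepted" immediately, while workers retry failed jobs with exponential backoff and park jobs that keep failing in a dead-letter list.

```python
import asyncio
from typing import Awaitable, Callable

Job = dict
JobHandler = Callable[[Job], Awaitable[None]]


async def worker(queue: asyncio.Queue, handler: JobHandler, dead_letters: list,
                 max_attempts: int = 3, base_delay: float = 0.01) -> None:
    """Pull jobs off the queue; retry with exponential backoff, then dead-letter."""
    while True:
        job = await queue.get()
        for attempt in range(1, max_attempts + 1):
            try:
                await handler(job)
                break
            except Exception as exc:
                if attempt == max_attempts:
                    # Park the job for inspection instead of retrying forever.
                    dead_letters.append({"job": job, "error": str(exc)})
                else:
                    await asyncio.sleep(base_delay * 2 ** (attempt - 1))
        queue.task_done()


async def encode_video(job: Job) -> None:
    # Hypothetical encoder: jobs flagged "corrupt" always fail.
    if job.get("corrupt"):
        raise ValueError("unreadable source file")
    await asyncio.sleep(0)  # stand-in for real encoding work


async def handle_upload(queue: asyncio.Queue, job: Job) -> dict:
    """User-facing endpoint: enqueue the work and return immediately."""
    await queue.put(job)
    return {"status": "accepted", "job_id": job["id"]}


async def main():
    queue: asyncio.Queue = asyncio.Queue()
    dead_letters: list = []
    workers = [asyncio.create_task(worker(queue, encode_video, dead_letters))
               for _ in range(2)]
    responses = [await handle_upload(queue, {"id": i, "corrupt": i == 3}) for i in range(5)]
    await queue.join()  # wait until every job has finished or been dead-lettered
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return responses, dead_letters


responses, dead_letters = asyncio.run(main())
print(len(responses), len(dead_letters))  # 5 1
```

In a real deployment the dead-letter list would be a separate queue or topic that operators can inspect and replay.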

By leveraging asynchronous operations, platforms can boost performance and maintain agility in their integrations.

API-First Development Practices

API-first development treats APIs as fully realized products rather than afterthoughts. This approach is critical for creator platforms that need to integrate with numerous third-party services while staying flexible for future growth. According to research, 51% of developers say more than half of their organization’s development efforts are focused on APIs. Additionally, 75% of respondents reported happier developers and faster product launches.

"As the connective tissue linking ecosystems of technologies and organizations, APIs allow businesses to monetize data, forge profitable partnerships, and open new pathways for innovation and growth." – McKinsey Digital

API-first development begins with collaboration among product managers, designers, and business stakeholders to define clear API specifications before coding starts. This ensures APIs meet real business needs and establish a clear “contract” between internal and external users. Comprehensive documentation is crucial, covering endpoint details, sample requests and responses, authentication methods, error handling, and rate-limiting policies. Mock APIs enable frontend and backend teams to work simultaneously, reducing development time and catching issues early. Clear versioning strategies also ensure new features integrate smoothly without disrupting existing functionality.
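One lightweight way to express an API contract in code is sketched here under stated assumptions: the `CreatorProfile` schema, the `/v1/creators/{creator_id}` path, and the fixture data are all hypothetical. Frontend code depends only on the `CreatorProfileAPI` protocol, so it can run against the mock today and the real service later. Teams more commonly write the contract as an OpenAPI document and generate mocks from it; this shows the same idea in plain Python.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class CreatorProfile:
    """Response schema agreed on before implementation starts (the API "contract")."""
    creator_id: str
    display_name: str
    follower_count: int


class CreatorProfileAPI(Protocol):
    """Contract for GET /v1/creators/{creator_id}; both teams code against this."""

    def get_creator(self, creator_id: str) -> CreatorProfile: ...


class MockCreatorProfileAPI:
    """Mock backend that frontend developers can use while the real service is built."""

    def __init__(self) -> None:
        self._fixtures = {"c1": CreatorProfile("c1", "Sample Creator", 1200)}

    def get_creator(self, creator_id: str) -> CreatorProfile:
        try:
            return self._fixtures[creator_id]
        except KeyError:
            # The contract specifies error behavior too, not just the happy path.
            raise LookupError(f"creator {creator_id!r} not found") from None


def render_profile_card(api: CreatorProfileAPI, creator_id: str) -> str:
    """Frontend code depends only on the contract, not on a concrete backend."""
    profile = api.get_creator(creator_id)
    return f"{profile.display_name} ({profile.follower_count} followers)"


print(render_profile_card(MockCreatorProfileAPI(), "c1"))  # Sample Creator (1200 followers)
```

The `/v1/` prefix in the contract is where a versioning strategy would hook in: a breaking change ships as `/v2/` while existing clients keep the old contract.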

Core Techniques for Scaling APIs

Once your API architecture is set up, the next step is ensuring it can handle traffic surges without breaking a sweat. By applying these key techniques, you can maintain performance, even during unpredictable spikes, and deliver a seamless experience for users.

Monitoring and Load Testing

Keeping your API running smoothly starts with monitoring and load testing. These steps help pinpoint bottlenecks, optimize resources, and ensure scalability under different conditions. With API usage having grown more than 300% over the past five years, understanding your system’s limits before issues arise is more important than ever.

Effective load testing means simulating real-world usage. For creator platforms, this could involve mimicking scenarios like simultaneous video calls, chat interactions, or heavy content uploads during peak times. Testing like this isn’t a one-and-done task - it’s a cycle: test, analyze, refine, and repeat. Start by establishing a performance baseline under normal conditions, then gradually increase the load to identify thresholds and breaking points.

Key metrics to watch include CPU usage, memory consumption, network I/O, response times, throughput, and error rates. When done right, load testing can improve issue detection accuracy by up to 40%. Integrating this process into CI/CD pipelines ensures performance issues are caught early in development. Armed with these insights, you can fine-tune caching and load balancing strategies to minimize bottlenecks.
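The baseline-then-ramp cycle can be sketched without any network calls. `fake_endpoint` is a hypothetical stand-in whose latency grows once concurrency passes its capacity and which starts failing beyond twice that; a real test would drive HTTP traffic with a dedicated tool such as Locust or k6. Each step reports p95 latency and error rate, two of the metrics listed above.

```python
import asyncio
import random
import statistics
import time


async def fake_endpoint(in_flight: list, capacity: int = 50) -> None:
    """Hypothetical endpoint: slows down past `capacity` concurrent calls, fails past 2x."""
    in_flight[0] += 1
    try:
        overload = max(0, in_flight[0] - capacity)
        if overload > capacity:
            raise RuntimeError("503 Service Unavailable")
        # Base latency of about 1 ms, stretched by queueing once over capacity.
        await asyncio.sleep(0.001 * (1 + overload / 10) * random.uniform(0.8, 1.2))
    finally:
        in_flight[0] -= 1


async def timed_call(in_flight: list) -> tuple:
    start = time.perf_counter()
    try:
        await fake_endpoint(in_flight)
        ok = True
    except RuntimeError:
        ok = False
    return time.perf_counter() - start, ok


async def run_step(concurrency: int) -> dict:
    in_flight = [0]
    results = await asyncio.gather(*(timed_call(in_flight) for _ in range(concurrency)))
    latencies = [lat for lat, ok in results if ok]
    errors = sum(1 for _, ok in results if not ok)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20)[-1] if len(latencies) >= 2 else float("nan")
    return {"concurrency": concurrency, "p95_ms": round(p95 * 1000, 2),
            "error_rate": errors / concurrency}


async def step_load(levels=(10, 50, 100, 200)) -> list:
    # Establish a baseline at low load, then raise it step by step.
    return [await run_step(level) for level in levels]


report = asyncio.run(step_load())
for row in report:
    print(row)
```

The step where `error_rate` first rises above zero marks the breaking point to investigate before real traffic finds it.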

Caching and Load Balancing

Did you know that a delay as small as 100 milliseconds can reduce sales by 1%? That’s why caching and load balancing are essential for creator platforms, where user engagement directly impacts revenue.

Caching stores frequently accessed data so it doesn’t have to be fetched repeatedly. For example, user profiles, content metadata, or popular video thumbnails can be cached to speed up API responses. Caching can happen at different levels - on the client side, server side, or through intermediary proxies.

"Caching is one of the best ways to speed up your APIs and keep users engaged."

– Adrian Machado, Engineer, Zuplo

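In-memory caching systems like Redis and Memcached offer very fast data retrieval. Memcached keeps data only in memory, so anything cached there is lost on restart and must be reloadable from the primary data store; Redis can optionally persist data to disk (RDB snapshots or an append-only file) when keeping the cache warm across restarts matters.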
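The cache-aside pattern behind most API caching fits in a few lines. This sketch keeps entries in a local dictionary with a time-to-live; in production the store would typically be a shared Redis or Memcached instance, and `load_profile` (hypothetical) would be a real database query. The injectable clock exists only so expiry can be demonstrated deterministically.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Minimal in-process cache-aside helper with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired: read from the source of truth and repopulate.
        self.misses += 1
        value = loader(key)
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Call on writes so readers don't keep seeing a stale profile."""
        self._store.pop(key, None)


db_reads = []


def load_profile(user_id: str) -> dict:
    db_reads.append(user_id)  # stand-in for a database query
    return {"id": user_id, "name": "creator-" + user_id}


fake_now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.get_or_load("u1", load_profile)  # miss: goes to the database
cache.get_or_load("u1", load_profile)  # hit: served from memory
fake_now[0] = 61                       # the entry has now expired
cache.get_or_load("u1", load_profile)  # miss again
print(cache.hits, cache.misses, db_reads)  # 1 2 ['u1', 'u1']
```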

Load balancing, on the other hand, spreads incoming requests across multiple servers to prevent any single server from being overwhelmed. By doing so, it boosts availability, scalability, and overall performance. Different algorithms cater to different needs: Round Robin works well for uniform setups, while the Least Connections method excels when request durations vary. Weighted load balancing is ideal for servers with differing capabilities, directing more traffic to the stronger machines.
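The three algorithms can be compared side by side in a simplified sketch. `Backend` and the selector classes are hypothetical; the `healthy` flag stands in for the result of a periodic health check, and the weighted strategy shown is weighted random selection (weighted round robin is a common alternative). Production load balancers such as NGINX or HAProxy implement these policies natively.

```python
import itertools
import random
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int = 1
    healthy: bool = True  # flipped by a periodic health check
    active_connections: int = 0


def _healthy(backends):
    live = [b for b in backends if b.healthy]
    if not live:
        raise RuntimeError("no healthy backends")
    return live


class RoundRobin:
    """Hand requests to each backend in turn; suits uniform servers and requests."""

    def __init__(self, backends):
        self.backends = backends
        self._cycle = itertools.cycle(backends)

    def pick(self) -> Backend:
        for _ in range(len(self.backends)):
            backend = next(self._cycle)
            if backend.healthy:  # skip nodes that failed their health check
                return backend
        raise RuntimeError("no healthy backends")


class LeastConnections:
    """Pick the backend with the fewest in-flight requests; suits uneven request durations."""

    def __init__(self, backends):
        self.backends = backends

    def pick(self) -> Backend:
        return min(_healthy(self.backends), key=lambda b: b.active_connections)


class WeightedRandom:
    """Send traffic in proportion to each backend's weight, so stronger machines get more."""

    def __init__(self, backends, rng=None):
        self.backends = backends
        self.rng = rng or random.Random()

    def pick(self) -> Backend:
        live = _healthy(self.backends)
        return self.rng.choices(live, weights=[b.weight for b in live], k=1)[0]


nodes = [Backend("api-1", weight=3), Backend("api-2"), Backend("api-3")]
nodes[1].healthy = False  # api-2 failed its last health check
rr = RoundRobin(nodes)
print([rr.pick().name for _ in range(4)])  # ['api-1', 'api-3', 'api-1', 'api-3']
```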

"Load balancing isn't just a fancy term – it's the secret sauce that transforms sluggish APIs into speed demons."

– Nate Totten, Co-founder & CTO, Zuplo

Health checks ensure traffic is routed only to functioning servers, and redundant load balancers eliminate single points of failure. Caching and load balancing together address performance at the request-handling layer, while database optimization takes care of the back end.

Database Optimization for Scale

When APIs hit their limits, the database is often the culprit, especially for platforms managing large amounts of user data, content metadata, and interaction history.

Start with query optimization. Tools like EXPLAIN can uncover inefficiencies, helping you refine query structures and make better use of indexes. For instance, optimizing fetch operations for user content libraries or engagement metrics can significantly reduce computation time.

Indexing is another game-changer. B-tree indexes handle both exact matches and range queries, hash indexes are optimized for exact matches only, and bitmap indexes handle low-cardinality data efficiently. Regular maintenance keeps these indexes performing at their best.
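SQLite ships with Python, which makes it a convenient place to watch an index change a query plan. The `content` table and index name are illustrative, and the exact plan text varies between SQLite versions; PostgreSQL and MySQL use `EXPLAIN` with their own output formats. SQLite's ordinary indexes are B-trees, so this also shows the exact-match case.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE content (id INTEGER PRIMARY KEY, creator_id TEXT, title TEXT, views INTEGER)"
)
conn.executemany(
    "INSERT INTO content (creator_id, title, views) VALUES (?, ?, ?)",
    [(f"c{i % 100}", f"video {i}", i) for i in range(10_000)],
)

QUERY = "SELECT id, title FROM content WHERE creator_id = ?"


def query_plan(sql: str, params: tuple = ()) -> str:
    """Join the detail column (the last one) of each EXPLAIN QUERY PLAN row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


before = query_plan(QUERY, ("c7",))
conn.execute("CREATE INDEX idx_content_creator ON content (creator_id)")
after = query_plan(QUERY, ("c7",))
print(before)  # a full table scan, e.g. "SCAN content"
print(after)   # an index search, e.g. "SEARCH content USING INDEX idx_content_creator (creator_id=?)"
```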

"Scaling APIs isn't just about adding more servers; it's about designing systems that can grow while staying reliable and efficient."

– Dileep Kumar Pandiya, Principal Engineer, ZoomInfo

For more extensive scaling, horizontal scaling through sharding distributes data across multiple nodes, reducing the load on individual servers. Shards can be organized by criteria like creator ID or geographic region, making data handling more efficient.
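Routing a request to the right shard can be as simple as hashing the shard key. This sketch assumes a fixed count of eight shards and uses creator ID as the key; both are illustrative choices.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 8  # illustrative fixed shard count


def shard_for(creator_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a creator ID to a shard number with a stable hash.

    Python's built-in hash() is randomized per process for strings, so a
    SHA-256 digest is used to get the same answer on every server.
    """
    digest = hashlib.sha256(creator_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# All of one creator's rows land on one shard, so per-creator queries stay local,
# while different creators spread roughly evenly across the shards.
load = Counter(shard_for(f"creator-{i}") for i in range(10_000))
print(sorted(load.items()))
```

Plain modulo hashing remaps most keys whenever the shard count changes, which is why larger systems often use consistent hashing or a lookup directory instead.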

Connection pooling minimizes the overhead of establishing new database connections, while read replicas spread read operations across multiple instances, easing the burden on the primary database. These strategies are particularly useful for platforms delivering content feeds or displaying user profiles.
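Connection pooling is usually handled by the database driver or ORM, but the mechanism is simple enough to sketch. `ConnectionPool` is a hypothetical minimal implementation backed by `queue.Queue`, with in-memory SQLite connections standing in for a real database. When every connection is checked out, callers wait up to a timeout (then `queue.Empty` is raised), which caps how hard a traffic spike can hit the database.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Minimal fixed-size pool: connections are opened once and reused."""

    def __init__(self, factory, size: int = 4, timeout: float = 5.0):
        self._idle: queue.Queue = queue.Queue(maxsize=size)
        self._timeout = timeout
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self):
        # Blocks for up to `timeout` seconds when every connection is in use,
        # then raises queue.Empty; this caps concurrent load on the database.
        conn = self._idle.get(timeout=self._timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool even if the query failed


created = []


def make_conn() -> sqlite3.Connection:
    # check_same_thread=False lets a pooled connection be handed to other threads.
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    created.append(conn)
    return conn


pool = ConnectionPool(make_conn, size=2)
for _ in range(100):  # 100 simulated requests
    with pool.connection() as conn:
        conn.execute("SELECT 1").fetchone()
print(len(created))  # 2: the pool reused its connections for every request
```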

Caching also plays a role here; using systems like Redis or Memcached to store frequently accessed data in memory can lighten the database load and improve response times. For large datasets, cursor-based pagination is a better choice than offset pagination, as it avoids performance slowdowns with higher page numbers.
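Cursor-based (also called keyset) pagination can be sketched with SQLite. The `posts` table and the use of the numeric `id` as the cursor are assumptions; public APIs usually wrap the cursor in an opaque token so clients don't depend on its format.

```python
import sqlite3
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, creator_id TEXT, body TEXT)")
conn.execute("CREATE INDEX idx_posts_creator_id ON posts (creator_id, id)")
conn.executemany("INSERT INTO posts (creator_id, body) VALUES (?, ?)",
                 [("c1", f"post {i}") for i in range(1, 26)])


def fetch_page(creator_id: str, limit: int, after_id: Optional[int] = None):
    """Keyset (cursor) pagination: seek past the last id seen instead of using OFFSET.

    With the (creator_id, id) index the database jumps straight to the cursor
    position, whereas OFFSET N must read and discard N rows first.
    """
    cursor_id = 0 if after_id is None else after_id  # ids start at 1
    rows = conn.execute(
        "SELECT id, body FROM posts WHERE creator_id = ? AND id > ? ORDER BY id LIMIT ?",
        (creator_id, cursor_id, limit),
    ).fetchall()
    # A full page may have more after it; a short page means we reached the end.
    next_cursor = rows[-1][0] if len(rows) == limit else None
    return rows, next_cursor


pages, cursor = [], None
while True:
    rows, cursor = fetch_page("c1", limit=10, after_id=cursor)
    pages.append([row[0] for row in rows])
    if cursor is None:
        break
print([len(page) for page in pages])  # [10, 10, 5]
```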

Finally, continuous monitoring is essential. Keep an eye on metrics like query latency, throughput, and error rates using dashboards and alerts to catch problems before they impact users. By staying vigilant, you can ensure your database remains efficient and scalable.

Advanced Scaling Solutions for High-Traffic Platforms

When you're dealing with millions of requests or experiencing rapid growth, basic scaling methods just won't cut it. To handle traffic surges while keeping performance and reliability intact, advanced strategies are a must. These are the tools that separate platforms that thrive under pressure from those that crumble.

Building on core practices like monitoring, caching, and database tuning, these advanced techniques are designed for the unique challenges of high-traffic scenarios.

Horizontal vs. Vertical Scaling Strategies

Scaling strategies are at the heart of managing growth effectively, and understanding the difference between horizontal and vertical scaling is key to making the right infrastructure choices. Think of it this way: horizontal scaling is like adding more lanes to a highway, while vertical scaling is like upgrading the bridge itself to handle more weight.

Horizontal scaling involves adding more machines or nodes to your system to spread the workload. This approach enhances load distribution, offers flexibility to scale when needed, and improves fault tolerance. For example, a creator platform might spin up additional servers during a major live event or viral moment. If one server goes down, others keep things running, and scaling can happen incrementally based on demand. However, horizontal scaling comes with its own set of challenges, like managing load balancing, keeping data consistent across nodes, and handling coordination overhead that grows with the number of nodes.
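Autoscalers decide how many nodes to run with simple arithmetic. This sketch follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current_replicas * current_metric / target_metric); the minimum, maximum, and 10% tolerance band used here are illustrative defaults, not recommendations.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule: ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: skip scaling to avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 API servers averaging 90% CPU against a 60% target during a live event:
print(desired_replicas(4, 90, 60))  # 6
# After the event, 6 servers average 30% CPU:
print(desired_replicas(6, 30, 60))  # 3
```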

Vertical scaling, on the other hand, is all about upgrading the power of individual machines by adding more CPU, RAM, or faster network capabilities. This method is great for boosting processing power and simplifying resource management for specific workloads. For instance, a platform might upgrade its video processing servers to handle 4K streams more efficiently. The downside? Vertical scaling has a ceiling due to hardware limits, creates single points of failure, and can involve complex upgrade processes.

| Scaling Type | Best For | Challenges | Example for Creator Platforms |
|---|---|---|---|
| Horizontal | Handling variable traffic, fault tolerance | Load balancing, data consistency issues | Adding servers during live streaming events |
| Vertical | Compute-heavy tasks, simpler management | Hardware limits, single points of failure | Upgrading video encoding hardware |

Take Airbnb as an example. It started with vertical scaling by upgrading its AWS EC2 instances but later adopted horizontal scaling, distributing workloads across multiple nodes and regions. Many platforms also use a hybrid approach, combining both strategies for maximum flexibility.

These scaling methods set the foundation for managing API traffic, a challenge that can be further tackled with the help of API gateways.

API Gateway Implementation

An API gateway acts as the central hub for managing your platform's API traffic. It controls how requests are routed to backend services, protects against overload, and ensures smooth operations through techniques like rate limiting and throttling. Rate limiting caps the number of requests a client can make within a specific timeframe, while throttling slows request processing when thresholds are reached. Together, these features shield your services from denial-of-service attacks and prevent bottlenecks.
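A fixed-window counter is the simplest way to cap requests per client within a timeframe. This is a single-process sketch with hypothetical names; a gateway running on several nodes would keep the counters in a shared store such as Redis.

```python
import time
from typing import Callable


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each window of `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self._state: dict = {}  # client_id -> (window index, request count)

    def allow(self, client_id: str) -> bool:
        window_index = int(self.clock() // self.window)
        start, count = self._state.get(client_id, (window_index, 0))
        if start != window_index:
            count = 0  # a new window has begun: reset the counter
        if count >= self.limit:
            return False  # the gateway would answer 429 Too Many Requests
        self._state[client_id] = (window_index, count + 1)
        return True


now = [0.0]
limiter = FixedWindowRateLimiter(limit=3, window_seconds=60, clock=lambda: now[0])
print([limiter.allow("app-123") for _ in range(4)])  # [True, True, True, False]
now[0] = 60.0
print(limiter.allow("app-123"))  # True: the next window has started
```

Fixed windows let a client send up to twice the limit across a window boundary (a full allowance at the end of one window and again at the start of the next); sliding-window and token-bucket limiters smooth that out.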

For instance, Kong Gateway is capable of handling over 50,000 transactions per second per node, making it a strong choice for high-traffic platforms. Beyond performance, API gateways offer built-in analytics to monitor request rates, errors, response times, and data usage. They also support load balancing, smart routing based on user attributes, and caching frequently accessed responses to reduce server strain. Additionally, they can transform data on the fly, which is helpful for aggregating information, changing formats, or normalizing error messages.

For creator platforms, this means smoother video uploads, efficient chat message routing, and secure user authentication. Features like auto-scaling allow API gateways to dynamically adjust resources based on demand, while service discovery ensures routing updates automatically as new services are added.

While API gateways are essential, they work even better when paired with modern architectures like microservices and reverse proxies.

Microservices and Reverse Proxies

Shifting from a monolithic structure to a microservices architecture is a game-changer for scaling rapidly growing platforms. This approach allows different components of your platform to scale independently, making it easier to handle surges in specific areas. Reverse proxies play a key role here, functioning much like API gateways by routing requests to the right microservices based on criteria like URL paths.
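Path-based routing, as a reverse proxy or gateway might perform it, reduces to a longest-prefix match. The prefixes and internal service addresses here are hypothetical.

```python
from typing import Optional

ROUTES = {
    # Hypothetical path prefixes mapped to internal microservice addresses.
    "/api/users": "http://user-service:8080",
    "/api/content": "http://content-service:8080",
    "/api/content/uploads": "http://upload-service:8080",
    "/api/payments": "http://payment-service:8080",
}


def route(path: str, routes: dict = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching path prefix, so more
    specific routes (uploads) win over general ones (content)."""
    best = None
    for prefix in routes:
        # Match whole path segments only: "/api/content" must not match "/api/contentious".
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    return routes[best] if best else None


print(route("/api/content/uploads/123"))  # http://upload-service:8080
print(route("/api/content/42"))           # http://content-service:8080
print(route("/api/contentious"))          # None
```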

Netflix is a prime example of this approach, using an API gateway to manage thousands of microservices. Similarly, Amazon relies on a microservices architecture to handle tasks like authentication, rate limiting, and traffic control across its vast ecosystem. Another example is Criteo, which scaled its ad-serving platform by adopting microservices and distributing workloads across thousands of servers worldwide. For creator platforms, this could mean separate microservices for user accounts, content processing, payments, and analytics.

It's important to distinguish between API gateways and reverse proxies. While both handle traffic routing, API gateways specialize in API management with features like authentication, rate limiting, and versioning. Reverse proxies, on the other hand, offer broader capabilities, including load balancing, SSL offloading, caching, and additional security measures. For instance, a reverse proxy might handle SSL encryption while an API gateway enforces security policies and manages request flows.

Integrating tools like Kafka, RabbitMQ, or Redis can further enhance this setup by ensuring reliable communication between microservices. This allows platforms to scale individual services independently. For example, your video processing service can scale up during peak upload times, while your user authentication service remains steady, leading to more efficient resource use and better overall performance.

Security and Compliance at Scale

Managing thousands of users and payments requires a strong focus on security to maintain trust and ensure smooth operations. As APIs scale to support high-traffic platforms for creators, balancing security, compliance, and performance becomes critical. The challenge lies in safeguarding data, maintaining efficiency, and adhering to regulations across multiple regions - all while staying ahead of new security threats. These measures work hand in hand with the performance and scalability strategies previously discussed.

Authentication, Authorization, and Rate Limiting

When operating at scale, basic username-password setups don’t cut it. Token-based authentication, particularly with JSON Web Tokens (JWT), has become a go-to approach for stateless RESTful APIs. OAuth 2.0 provides a secure way for users or applications to access resources without needing to share credentials. Combined with OpenID Connect (OIDC), it adds an identity layer that’s invaluable for platforms like TwinTone, where creators manage digital twins and interact with fans. Fine-grained access control is essential here - JWT claims allow for highly specific API permissions, going beyond traditional Role-Based Access Control (RBAC) as the platform grows.

Rate limiting acts as a frontline defense against misuse and attacks. It prevents individual clients from overwhelming the system with excessive requests, reducing the risk of overload or denial-of-service incidents. When rate limits are exceeded, APIs should return a 429 (Too Many Requests) status code and include limit details in the HTTP response headers, such as a Retry-After header telling the client when to try again. The token bucket algorithm absorbs short bursts up to a fixed capacity while enforcing an average rate, whereas the leaky bucket algorithm smooths requests into a steady outflow. Adding multi-factor authentication (MFA), regular token rotation, and detailed logging strengthens security further, making it easier to detect and prevent potential misuse.
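A token bucket and the 429 response it drives can be sketched as follows. The rate, capacity, and handler wiring are assumptions; `Retry-After` is the standard HTTP header for this case, while `X-RateLimit-Remaining` is a widely used but non-standard convention.

```python
import math
import time
from typing import Callable


class TokenBucket:
    """Refill `rate` tokens per second up to `capacity`; each request spends one token."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated = clock()

    def try_acquire(self) -> tuple:
        """Return (allowed, seconds until the next token becomes available)."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True, 0.0
        return False, (1 - self.tokens) / self.rate


def handle_request(bucket: TokenBucket) -> tuple:
    """Map the limiter decision onto an HTTP status code and headers."""
    allowed, wait = bucket.try_acquire()
    if allowed:
        return 200, {"X-RateLimit-Remaining": str(int(bucket.tokens))}
    # Retry-After is given in whole seconds, rounded up.
    return 429, {"Retry-After": str(math.ceil(wait)), "X-RateLimit-Remaining": "0"}


now = [0.0]
bucket = TokenBucket(rate=2, capacity=5, clock=lambda: now[0])
print([handle_request(bucket)[0] for _ in range(6)])  # [200, 200, 200, 200, 200, 429]
now[0] = 1.0  # one second later, two tokens have refilled
print([handle_request(bucket)[0] for _ in range(3)])  # [200, 200, 429]
```

The burst of five is absorbed at once, and after that clients are held to the refill rate of two requests per second.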

Beyond controlling access, safeguarding sensitive data and meeting compliance standards are just as important.

Data Protection and Compliance

Creator platforms face unique challenges, managing both personal data and financial transactions. Regulations like GDPR, CCPA, PCI DSS, SOC 2, and ISO 27001 demand strict adherence to data protection standards. Failure to comply can lead to data breaches, hefty fines, operational setbacks, and reputational harm. In 2023, nearly 70% of financial services and insurance companies experienced delays due to API security concerns, with 92% reporting production API security issues and one in five suffering a full-scale breach.

Encryption plays a key role in protecting data. Using methods like AES-256 for data at rest and TLS 1.2/1.3 for data in transit ensures sensitive information remains secure, even if intercepted. Adopting a privacy-by-design approach - such as collecting only essential data, offering clear management options, and scaling privacy controls alongside growth - helps maintain compliance. For example, TwinTone must ensure that its digital twin data is secure and meets international standards.

A zero trust architecture further strengthens security by requiring HTTPS for all API traffic and verifying every request, no matter its origin. API keys, while simple, should be treated like passwords and rotated regularly. For higher-risk operations, OAuth scopes can limit token capabilities, minimizing potential damage if a token is compromised.
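Treating API keys like passwords means storing only a hash and comparing in constant time. This sketch assumes randomly generated, high-entropy keys, for which a fast hash such as SHA-256 is generally considered sufficient (low-entropy human passwords need a slow hash like bcrypt or Argon2 instead). The `tt_` prefix and the `KeyRing` grace-period rotation scheme are hypothetical.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass, field


def issue_api_key() -> tuple:
    """Return (plaintext key shown to the developer once, hash to store)."""
    key = "tt_" + secrets.token_urlsafe(32)  # hypothetical prefix that helps secret scanners spot leaks
    return key, hashlib.sha256(key.encode()).hexdigest()


def verify_api_key(presented: str, stored_hash: str) -> bool:
    """Hash the presented key and compare in constant time to avoid timing leaks."""
    candidate = hashlib.sha256(presented.encode()).hexdigest()
    return hmac.compare_digest(candidate, stored_hash)


@dataclass
class KeyRing:
    """Stored key hashes mapped to expiry times, so an old key keeps working
    for a grace period after rotation while clients switch over."""
    active: dict = field(default_factory=dict)  # hash -> expiry timestamp

    def rotate(self, grace_seconds: float, now: float) -> str:
        # Drop hashes that have already expired.
        self.active = {h: exp for h, exp in self.active.items() if exp > now}
        # Existing keys now expire at the end of the grace period at the latest.
        for h in self.active:
            self.active[h] = min(self.active[h], now + grace_seconds)
        key, digest = issue_api_key()
        self.active[digest] = float("inf")
        return key

    def check(self, presented: str, now: float) -> bool:
        return any(verify_api_key(presented, h) and exp > now
                   for h, exp in self.active.items())


ring = KeyRing()
old_key = ring.rotate(grace_seconds=3600, now=0)
new_key = ring.rotate(grace_seconds=3600, now=100)
print(ring.check(old_key, now=200), ring.check(new_key, now=200))    # True True
print(ring.check(old_key, now=5000), ring.check(new_key, now=5000))  # False True
```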

Maintaining these safeguards requires ongoing testing and monitoring.

Security Testing and Monitoring

Effective security is an ongoing process, relying on continuous monitoring and regular testing. Keeping a detailed inventory of all internal and external APIs, along with consistent vulnerability monitoring, is essential as platforms integrate with third-party services. Automated alerts can quickly flag unusual activity, such as unexpected traffic or suspicious login attempts.

Regular security assessments are crucial. Internal audits, vulnerability scans, and third-party penetration tests help identify vulnerabilities before attackers can exploit them. Comprehensive logging - covering authentication events, rate limiting actions, and other API activities - helps detect abnormal patterns while respecting user privacy. Additionally, enforcing strict input validation at every endpoint protects against injection attacks by ensuring incoming data is properly formatted and within expected value ranges.
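Allow-list validation for a single endpoint might look like this. The `PATCH /creators/me` endpoint, its fields, and the limits are hypothetical. Validation narrows what reaches the application, but it complements parameterized queries rather than replacing them as the defense against SQL injection.

```python
import re
from typing import Any

USERNAME_RE = re.compile(r"[a-z0-9_]{3,30}")
ALLOWED_FIELDS = {"username", "bio", "price_cents"}


def validate_profile_update(payload: Any) -> dict:
    """Allow-list validation for a hypothetical PATCH /creators/me body.

    Collects every problem and raises ValueError; returns only known fields.
    """
    if not isinstance(payload, dict):
        raise ValueError("body must be a JSON object")
    errors = []
    unknown = set(payload) - ALLOWED_FIELDS
    if unknown:
        errors.append(f"unknown fields: {sorted(unknown)}")
    if "username" in payload:
        name = payload["username"]
        if not isinstance(name, str) or not USERNAME_RE.fullmatch(name):
            errors.append("username must be 3-30 characters of a-z, 0-9 or _")
    if "bio" in payload:
        bio = payload["bio"]
        if not isinstance(bio, str) or len(bio) > 500:
            errors.append("bio must be a string of at most 500 characters")
    if "price_cents" in payload:
        price = payload["price_cents"]
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(price, int) or isinstance(price, bool) or not 0 <= price <= 1_000_000:
            errors.append("price_cents must be an integer from 0 to 1000000")
    if errors:
        raise ValueError("; ".join(errors))
    return {k: v for k, v in payload.items() if k in ALLOWED_FIELDS}


print(validate_profile_update({"username": "maya_art", "price_cents": 999}))
# {'username': 'maya_art', 'price_cents': 999}
try:
    validate_profile_update({"username": "x'; DROP TABLE users;--", "is_admin": True})
except ValueError as err:
    print(err)
```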

"I like to think of governance as controlling the controlling. So, it's high-level thinking about what are we dealing with regarding regulation, ethics, business strategy, and aligning all those things into a policy." – Rob van der Veer, Creator of OWASP AI Exchange

Real-time monitoring tools and alerts are vital for responding swiftly to threats. This is especially important for platforms with real-time interactions between creators and fans, where security issues could immediately disrupt user experience and revenue. Ultimately, maintaining security and compliance at scale is an ongoing effort, evolving alongside your platform to meet new challenges while supporting growth and performance.

Case Study: How TwinTone Scaled Its API Infrastructure

TwinTone’s journey to support over 2,000 creators and major brands is a compelling example of tackling the challenges involved in scaling API infrastructure for AI-driven platforms. With unique demands like real-time video calls, live streaming, and AI-generated content in over 30 languages, the platform required a tailored approach to handle its growth and maintain a seamless user experience.

Initial Challenges and Architecture

When TwinTone launched, it faced the common growing pains of a creator-focused platform. Its early architecture struggled under the weight of increasing demands, with several critical issues threatening its ability to scale effectively.

The most pressing problem was latency in cross-border data transfers. With creators and fans spread across the globe, the lack of region-specific optimizations pushed latency up to 50% above acceptable levels. This was particularly damaging for core features like AI-powered video calls and live streaming, where even small delays could break the illusion of real-time interaction.

TwinTone’s requirements for AI processing, real-time engagement, and multilingual support exposed the limitations of standard multicloud infrastructures. These challenges not only increased operational complexity but also made it difficult to deliver a consistent experience across regions. Harman Singh, Senior Software Engineer at StudioLabs, highlighted the importance of anticipating bottlenecks:

"I've been responsible for managing APIs that serve millions of users daily. We've learned that it's crucial to continuously monitor our API performance and anticipate potential bottlenecks before they occur".

To address these hurdles, TwinTone needed to completely rethink its infrastructure and adopt innovative scaling strategies.

Implementation: Scaling Strategies and Solutions

TwinTone focused on three key areas to transform its API infrastructure: event-driven architecture, custom infrastructure, and centralized API gateways, all backed by proactive monitoring. These changes were critical to handling traffic spikes and the platform’s AI processing demands.

The first step was moving from a traditional request-response model to an event-driven architecture. This shift allowed TwinTone to manage traffic spikes more effectively by using message queues like Kafka to distribute processing loads, the same change that Stanislav Khilobochenko, VP of Customer Services at Clario, credited with keeping his API’s response times fast during peak usage.

For TwinTone, this meant redesigning how its AI digital twins processed fan interactions, ensuring no single server was overwhelmed.

The second major change involved building custom infrastructure tailored to the platform’s unique needs. Instead of relying solely on standard cloud solutions, TwinTone developed systems capable of handling AI video processing, real-time streaming, and multilingual content generation. This approach allowed the platform to address challenges that off-the-shelf infrastructure couldn’t handle, creating a more efficient and scalable system.

Finally, TwinTone implemented robust API gateway solutions to centralize control and balance traffic across its diverse services. These gateways played a critical role in managing everything from AI processing for digital twins to payment systems for creators, who retain 100% of their revenue on the platform.

Results and Lessons Learned

The infrastructure overhaul delivered measurable improvements and valuable insights. The most notable success was the reduction of cross-border latency through region-specific optimizations. This upgrade significantly enhanced the quality of AI video calls and live streaming for users worldwide while maintaining support for over 30 languages.

TwinTone’s emphasis on proactive monitoring and load testing proved essential. As Sergiy Fitsak, Managing Director at Softjourn, explained:

"One of the biggest lessons learned when scaling APIs to handle increased traffic is that scalability isn't just about adding more servers - it requires optimizing architecture, caching, and load balancing from the start".

This mindset helped TwinTone avoid common pitfalls and ensured its infrastructure could handle complex demands.

A standout moment came during an autonomous test where the platform’s AI Agent successfully placed a Domino’s pizza order. James Rowdy commented on the significance of this achievement:

"This experiment shows how AI can handle real-world tasks - efficiently and autonomously. As TwinTone continues to evolve, we're seeing the boundaries blur between what we trust humans to do and what we can entrust machines to do".

TwinTone’s current architecture supports a range of user needs, from the $99/month Creator Plan to the enterprise-level "Mr Beast Mode" with custom pricing. This scalability underscores the importance of building flexibility into API design from the start.

The experience also highlighted the value of continuous monitoring and analytics. By tracking performance across key features like video calls, live streaming, and content generation, TwinTone could identify and address issues early, ensuring creators’ revenue streams weren’t disrupted. For a platform where downtime directly impacts earnings, this capability is indispensable.

Conclusion: Planning for Long-Term API Growth

Scaling APIs for creator platforms demands ongoing planning and foresight. With 75% of IT professionals emphasizing the importance of scalable design and more than 90% of developers actively using APIs, early architectural choices play a critical role in long-term success. Enterprises manage an average of 613 API endpoints in production, highlighting the need for strategic planning at every stage.

Once immediate scalability issues are addressed, the focus shifts to ensuring long-term resilience. Key strategies like event-driven architectures, strategic caching, and asynchronous processing lay the groundwork for systems that can handle sustained growth. As Dileep Kumar Pandiya, Principal Engineer at ZoomInfo, points out:

"One of the most important lessons I have learned in scaling APIs is that it's not only about handling more traffic, it's more about keeping systems reliable, efficient, and cost-effective".

These strategies deliver measurable performance improvements. For example, optimizing caching can reduce response times by up to 70%. Meanwhile, modular system designs allow platforms to scale up to 70% faster than monolithic systems, and horizontally scaled architectures can manage up to 10 times more traffic without sacrificing performance.

Beyond these technical gains, continuous monitoring and fine-tuning are what set thriving platforms apart. Take TwinTone's platform as an example - it supports real-time AI video calls and live streaming in over 30 languages while ensuring creators keep 100% of their earnings. This success stems from proactive infrastructure management, such as reducing cross-border latency and implementing robust API gateways.

Long-term planning also requires recognizing that API optimization is an ongoing process. As platforms evolve, they must continually refine database queries, apply intelligent rate limiting, and integrate technologies like CDNs and load balancers. These efforts ensure systems remain responsive, secure, and cost-efficient as they scale.

Treating API architecture as a strategic asset can be a game-changer. Platforms that invest in resilient, flexible systems early on are better equipped to seize growth opportunities and avoid being held back by technical bottlenecks. This proactive approach not only positions platforms to scale smoothly but also provides a competitive edge in the fast-paced creator economy.

FAQs

What are the advantages of using event-driven architecture to scale APIs for creator platforms?

Event-driven architecture (EDA) is a powerful approach for scaling APIs on creator platforms, offering scalability, flexibility, and resilience. By breaking down components into smaller, independent pieces, EDA allows systems to handle sudden spikes in user activity more efficiently. This means you can expand specific parts of the system as needed without disrupting the rest, ensuring smooth performance even during peak demand.

Another major advantage of EDA is its ability to enhance real-time responsiveness. This is especially important for features like live streaming or interactive content, where applications need to react instantly to events. The system's design ensures a seamless experience for users, keeping them engaged. On top of that, the loosely connected components improve fault tolerance - if one part encounters an issue, the rest of the system keeps running without a hitch. By adopting EDA, creator platforms can build APIs that are better equipped to handle the demands of a growing and active user base.

How does API-first development improve scalability and integration for creator platforms?

API-first development prioritizes APIs as a core element of the software design process, making it easier to scale and integrate creator platforms. This method enables development teams to tackle different components simultaneously, cutting down on delays and accelerating the release of new features.

By establishing clear API contracts early on, platforms can connect seamlessly with third-party tools and services, minimizing compatibility issues and integration headaches. Plus, API-first practices emphasize adaptability and reusability, allowing platforms to adjust to market shifts and handle increasing user activity more effectively. The result? Faster development timelines, improved scalability, and a smoother experience for both creators and developers.

What are the best ways to secure and ensure compliance for APIs as your creator platform grows?

To keep your APIs protected and aligned with security standards as your platform grows, start with an API gateway. An API gateway acts as a hub for managing traffic, enforcing security protocols like rate limiting, and maintaining consistent logging. It also makes monitoring more straightforward, ensuring your API environment remains secure as usage increases.

Next, consider adopting a Zero Trust security model, in which every API request is authenticated and authorized regardless of where it originates. Role-based access control (RBAC) fits naturally into this model by limiting access based on user roles. Additionally, prioritize strong encryption for data, whether it’s in transit or at rest. Regular penetration testing and continuous vulnerability monitoring are also key to identifying and addressing potential risks before they become problems.

By following these practices, you not only protect sensitive data but also ensure compliance with evolving security requirements as your platform scales.